What Jobs Predicted, and Why AI is Leading Us to a Better Future

February 25, 2021

In this fascinating 1981 interview with Steve Jobs, we hear a twenty-six-year-old Jobs persuade skeptics that the rise of computers is not only inevitable but desirable. He likens computers to the bicycle of the 21st century for their ability to amplify our inherent human abilities, freeing us from monotonous, mechanical work so that we can devote our time and energy to conceptual, creative tasks.

The interviewer’s concerns and fears around computers mirror more recent debates around artificial intelligence. What is AI capable of? Will artificial intelligence eliminate our jobs? What about our privacy? Will AI go rogue? And finally (in a nod to Terminator and Westworld), will superintelligent machines outsmart their human creators?

AI is often conceived of as a futuristic technology, analysed in isolation, without context. But AI has deep roots in our past and our present. The rise of computers (and the accompanying fears) in the 1980s provides a blueprint for navigating our own relationship with AI in this decade. By looking at how society and governments managed the objections and fears that computers raised when they were first launched, we can chart AI’s evolution going forward. It’s not a bet on the future, but rather an opportunity to learn from a past disruption with similar drivers and challenges.

1. Still Waiting for the Robocalypse

Fears of robots stealing jobs have persisted for over 200 years. Every decade brings more of the same anxieties around automation and job loss. This timeline actually charts these fears since the 1920s, a very telling story of how insecurities that plague the popular imagination often prove groundless.

Take the legal industry. For over two decades, the process of “discovery” (sorting through documents to find the ones most relevant to lawsuits) meant millions of dollars in legal bills. When computers came around in the nineties, machines completed the task with far greater accuracy, at a fraction of the cost. Software correctly identified 95 percent of the relevant documents, versus 51 percent for humans.

But the advent of legal software did not throw paralegals and lawyers into unemployment. In fact, employment for paralegals and lawyers grew at a fantastic pace. The same happened when ATMs and barcode scanners arrived. Rather than shrinking, the number of workers in these sectors grew. The automation paradox describes this effect: when computers start doing the work of people, the need for people often increases. By lowering costs and improving efficiency, automation actually increases demand in these industries. AI, like automation, doesn’t eliminate people. It simply changes the nature of our jobs. It makes new jobs possible.

A Relationship That’s Synergistic, Rather Than Competitive

In the same vein as Steve Jobs’s prescient arguments around computing, experts reason that working alongside AI actually augments human potential. AI-intensive technologies like virtual assistants, the Internet of Things, smart robots and augmented data discovery are already generating tangible cost and revenue benefits for enterprises. We’ve found this to be true in our own experience with Gaia. Rather than making staff redundant, AI is helping enhance their abilities. It’s freeing them up to focus on value-added tasks, and reducing staff turnover. The real challenge of AI isn’t unemployment; it’s learning how we can better integrate AI as a cognitive partner.

2. Beware the Kodak

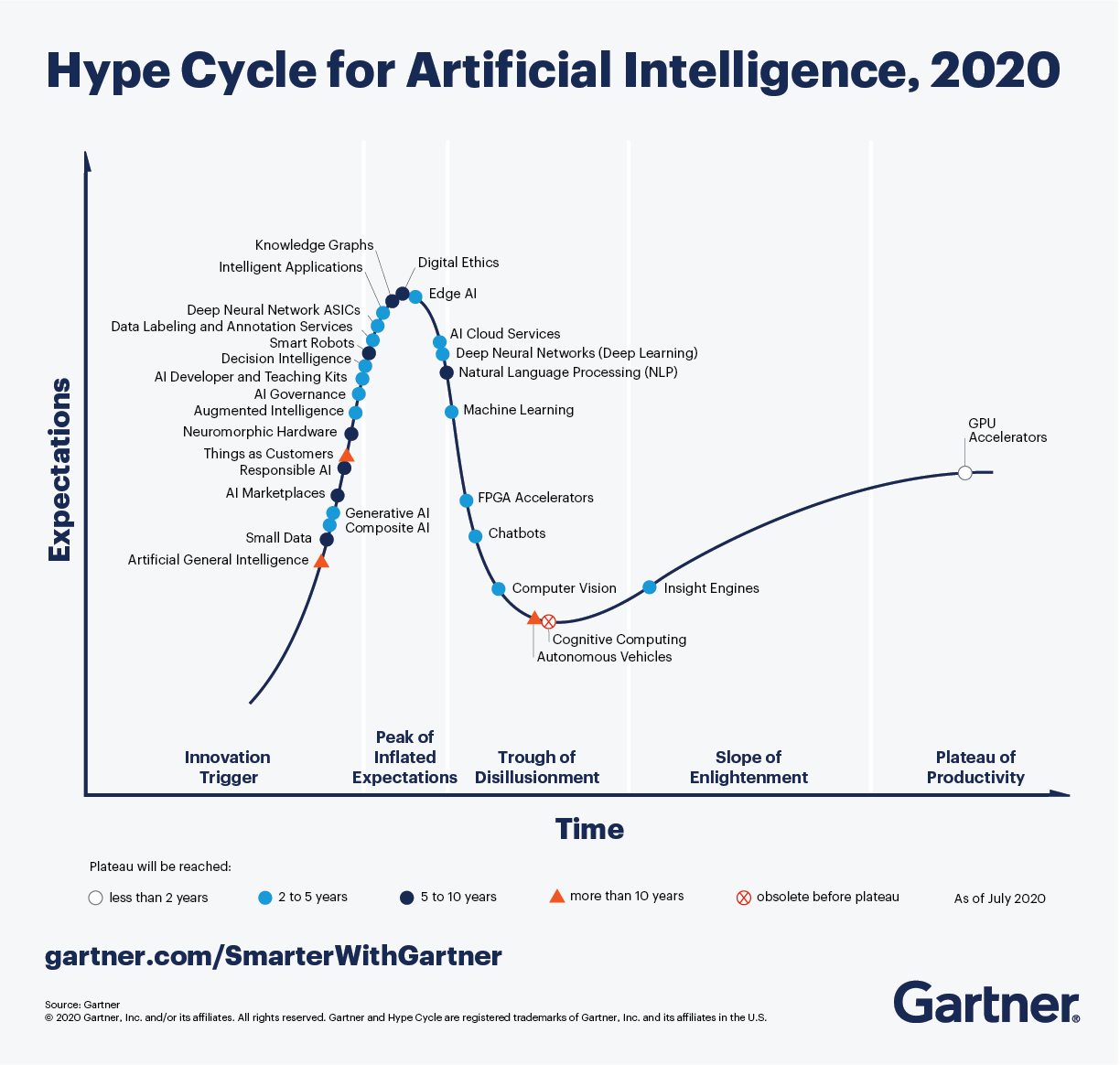

When the Kodak portable camera was introduced in 1888, it set off a panic over privacy. Exaggerated privacy concerns have played out for a number of well-known technologies — computers of course, but also transistors, the internet, RFID tags and wifi. The privacy panic cycle for AI (and the accompanying hype cycle as Gartner dubs it) is only playing out now.

Source: Gartner

Privacy is an important question, and as with computers, the private and public sectors are designing solutions and legislation to safeguard our interests as users. In the case of computers, regulations were enacted to deal with data storage and processing (Sweden legislated for data privacy in 1973, the US in 1974). Similarly, new regulations will be implemented to monitor data use in AI. The European Union’s General Data Protection Regulation is already in effect, as is California’s more recent Consumer Privacy Act.

In our information age, consumers and enterprises can make informed decisions when it comes to how our data is stored and processed. More importantly, recognising the privacy panic cycle helps put our fears of AI into perspective. As history has shown, with adequate oversight, these privacy concerns are rarely realised. Rather than giving in to frenzies of hype, we can let common sense and good regulations prevail.

Designing AI for Transparency, Inclusion & Accountability

The good news is that it is possible to design AI learning systems that analyse data sets without compromising customer data. Developers can minimise challenges around privacy and bias in the development stage, well before production. In our own product Gaia for instance, we draw behaviour patterns and insights from anonymised data.

There’s also a role for humans to play. Human oversight and good governance are absolutely essential (more evidence that we’re not redundant yet). Algorithms are designed by people, and AI is only as good as the data it works with. As organisations, we have a responsibility to take a regular sample of AI decisions and evaluate the analysis behind them. This way we can ferret out bias, and implement robust data protection and encryption. Proactively addressing our fears will be key to AI’s success in business, because otherwise consumers won’t get on board.

If there’s one takeaway, it’s that, much as with computers, work in the future will be a collaborative effort between people and artificial intelligence. As with heavy machinery or smartphones, we still need good design to manage and mitigate risks. AI is no different.

3. Beyond Analytics: AI as a Creative Partner

Jobs envisioned personal computers as a tool to liberate mankind from the drudgery of daily life and to expand our creativity and imagination, very much in the spirit of the seventies. Computers amplify our abilities, Jobs emphasised. AI is much the same.

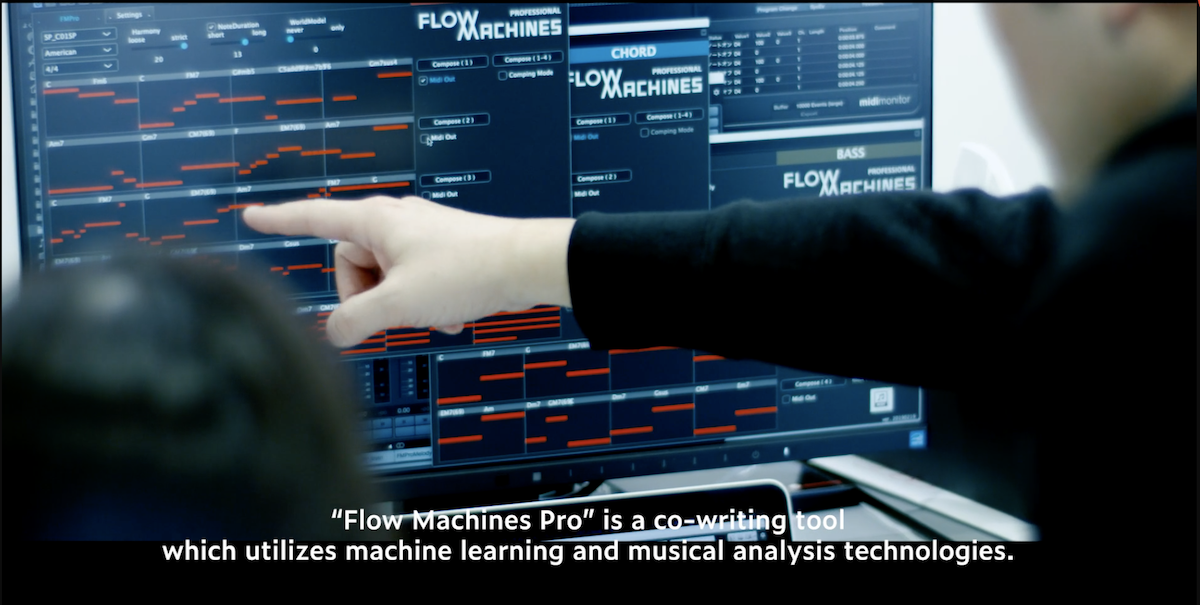

Like computers, AI is getting better at creativity with time. OpenAI’s most recent AI model, DALL-E, takes text captions as input and produces original images as output. This is interesting because most AI we know today handles only one type of data. NLP models analyse text; facial recognition systems analyse images. But DALL-E can both “read” and “see”, vastly expanding AI’s potential to understand the world and to generate groundbreaking insights. Tools like DALL-E and Sony’s Flow Machines demonstrate how AI of the future will act as a creative partner and idea-generator for humans in industries like product design, music, fashion and architecture. And these are reasons to be optimistic.

Creative AI: Sony’s Flow Machines make AI-assisted music

4. AI for Comfort, and the Greater Good

Computers created comforts we take for granted today: the magic of internet access, GPS, financial payments, remote communication, collaboration and learning. Just as computers moved from enterprises to homes, AI is heading toward a future that’s distinctly personal and democratic.

Dramatic AI applications like self-driving cars have dominated the public imagination. But the AI we’re already using, and the AI with the greatest scope for immediate impact, is actually much quieter. These are the systems that tell us what movies we might enjoy on Netflix, what products might be a good buy on Amazon, or that recommend friends on Twitter.

In the not-too-distant future, people will make use of private AI engines: personal digital assistants, but much more powerful, modelled on our unique preferences, values, beliefs and goals. These engines will put us in control, so our data is fully ours, and fully encrypted.

Much like personal computers, AI has the potential to profoundly impact and enhance the quality of our lives, to introduce ease in business, healthcare and industry. As a society, if we maintain an awareness of what’s going on, AI can enrich our experience of life, dissolve boundaries, and open us up to new opportunities. Ultimately, as history goes to show, it’s up to us — and how we use technology.

AI is already helping doctors make more accurate diagnoses and save lives

When computers were first introduced to the public in the 1970s and 1980s, adopting them was a much bigger ask than adopting AI today. The early 1980s saw a flurry of anxieties around computers. "Computerphobia" cropped up in magazines, newspapers, advertising, psychology and books. A 1983 Personal Computing cover story described the symptoms: fear of breaking the computer, fear of losing a job, fear of looking stupid, and fear of losing control. These fears only fell out of fashion in the 1990s, when we swapped one fear for another: the threat of cyberspace.

Artificial intelligence isn’t unexpected. We’ve arrived here via a natural evolution of computation. We’re already well along the curve, so there’s no going back. What we can do, though, is focus on putting ourselves in the best possible position so that when the technology matures, we’ve already created scalable controls, we’ve thought deeply about its ethics, and we’ve implemented good governance to balance any risks. And with our foundations in place, we’re all set for positive, transformational outcomes. AI is on track to transform the world for the better.